Why use matrix multiplication instead of direct convolution operations for convolution kernels in deep learning?

Since the Fortran days, researchers have spent years optimizing GEMM libraries. GEMM stands for GEneral Matrix Multiplication, and the GEMM routines Caffe relies on (provided by BLAS libraries) are highly tuned for exactly that operation. So it can be faster to recast a convolution as a matrix multiplication and hand it to these libraries than to implement the convolution directly.
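For comparison, here is what the "normal" convolution looks like when written directly as nested loops. This is a minimal NumPy sketch under assumptions of my own: channels-last input `(H, W, C)`, kernels stored as `(K, kh, kw, C)`, no padding, and no kernel flip (the cross-correlation convention most deep learning frameworks call "convolution"). The name `conv2d_direct` is hypothetical.

```python
import numpy as np

def conv2d_direct(x, w, stride=1):
    """Direct (loop-based) 2D convolution, 'valid' padding, no kernel flip.

    x: input of shape (H, W, C)
    w: kernels of shape (K, kh, kw, C), one kernel per output channel
    returns: output of shape (H_out, W_out, K)
    """
    H, W, C = x.shape
    K, kh, kw, _ = w.shape
    H_out = (H - kh) // stride + 1
    W_out = (W - kw) // stride + 1
    y = np.zeros((H_out, W_out, K), dtype=np.result_type(x, w))
    for i in range(H_out):
        for j in range(W_out):
            # the input patch currently under the kernel at output position (i, j)
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw, :]
            for k in range(K):
                # one dot product per (position, kernel) pair
                y[i, j, k] = np.sum(patch * w[k])
    return y
```

Every output value is a small dot product computed separately, which is hard to make cache- and SIMD-friendly in general; that is the gap the GEMM route tries to close.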

Another approach is to use Fourier transforms, but things get complicated once you add strides to the convolution.
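A minimal sketch of the Fourier route for a single-channel input, assuming NumPy's `np.fft.rfft2`/`irfft2` and "valid" (no padding) output; `conv2d_fft` is a hypothetical name. The kernel is flipped because an FFT product gives true convolution, while deep learning layers compute cross-correlation. Note how stride is handled: the frequency-domain product naturally yields every stride-1 output, so the strided result is obtained by throwing most of them away, which is one concrete form of the complication mentioned above.

```python
import numpy as np

def conv2d_fft(x, k, stride=1):
    """'Valid' 2D cross-correlation of single-channel x (H, W) with kernel k (kh, kw)."""
    H, W = x.shape
    kh, kw = k.shape
    # pad to the full linear-convolution size so circular wrap-around cannot occur
    shape = (H + kh - 1, W + kw - 1)
    # flip the kernel: spectrum products give convolution, we want cross-correlation
    spectrum = np.fft.rfft2(x, shape) * np.fft.rfft2(k[::-1, ::-1], shape)
    full = np.fft.irfft2(spectrum, shape)
    # keep only positions where the kernel lies fully inside the input
    valid = full[kh - 1:H, kw - 1:W]
    # stride: compute every stride-1 output, then subsample (wasted work for stride > 1)
    return valid[::stride, ::stride]
```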

So how do you convert a convolution into a matrix multiplication?
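In terms of shapes, a sketch of the bookkeeping, assuming an $H \times W \times C$ input, $K$ kernels of size $k_h \times k_w \times C$, stride $s$, and no padding (the symbols $\mathbf{P}$, $\mathbf{F}$, $\mathbf{Y}$ are my own labels, not from the figures):

$$
H_{out} = \left\lfloor \frac{H - k_h}{s} \right\rfloor + 1, \qquad
W_{out} = \left\lfloor \frac{W - k_w}{s} \right\rfloor + 1
$$

$$
\underbrace{\mathbf{Y}}_{(H_{out} W_{out}) \times K}
= \underbrace{\mathbf{P}}_{(H_{out} W_{out}) \times (k_h k_w C)}
\;
\underbrace{\mathbf{F}}_{(k_h k_w C) \times K}
$$

Each row of $\mathbf{P}$ is one flattened input patch, each column of $\mathbf{F}$ is one flattened kernel, and $\mathbf{Y}$ is reshaped back to $H_{out} \times W_{out} \times K$. For example, a $4 \times 4 \times 1$ input with one $2 \times 2$ kernel and $s = 1$ gives $\mathbf{P}$ of size $9 \times 4$, $\mathbf{F}$ of size $4 \times 1$, and a $9 \times 1$ result that becomes a $3 \times 3$ output map.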

|

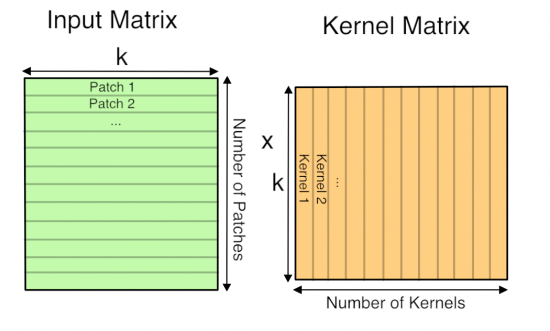

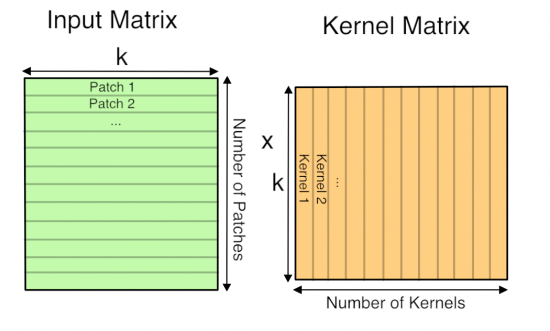

| each path of input 2d/3d feature becomes a row |

|

| A kernel in rolled into a column and stacked column wise |

|

|
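A minimal NumPy sketch of the two steps above, assuming channels-last `(H, W, C)` input, kernels stored as `(K, kh, kw, C)`, no padding, and the cross-correlation convention most frameworks use. "im2col" is the usual name for the patch-unrolling step, but the functions here are illustrative, not Caffe's API. In a real library the final multiply would be a call into an optimized BLAS GEMM; here NumPy's `@` stands in for it.

```python
import numpy as np

def im2col(x, kh, kw, stride=1):
    """Unroll every kh x kw x C patch of x (shape (H, W, C)) into one row."""
    H, W, C = x.shape
    H_out = (H - kh) // stride + 1
    W_out = (W - kw) // stride + 1
    rows = np.empty((H_out * W_out, kh * kw * C), dtype=x.dtype)
    for i in range(H_out):
        for j in range(W_out):
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw, :]
            # row-major flatten in (kh, kw, C) order; row index follows output position
            rows[i * W_out + j] = patch.ravel()
    return rows, H_out, W_out

def conv2d_gemm(x, w, stride=1):
    """Convolution as one matrix multiply: (patches as rows) @ (kernels as columns).

    x: (H, W, C); w: (K, kh, kw, C). Returns (H_out, W_out, K).
    """
    K, kh, kw, C = w.shape
    rows, H_out, W_out = im2col(x, kh, kw, stride)
    # flatten each kernel in the same (kh, kw, C) order, one kernel per column
    cols = w.reshape(K, kh * kw * C).T
    # the single GEMM: (H_out*W_out, kh*kw*C) @ (kh*kw*C, K)
    y = rows @ cols
    # row i*W_out + j of y holds all K outputs for position (i, j)
    return y.reshape(H_out, W_out, K)
```

The trade-off is memory: overlapping patches mean each input pixel can be copied into up to `kh * kw` rows, so the patch matrix is much larger than the input. In exchange, all the work becomes one large, regular matrix multiply, which is exactly what tuned GEMM libraries are good at.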

MAKES SENSE!!!!